Control a video player with hand gestures

- Introduction

- How to control a web player with hand gestures

- Problem Breakdown

- Part 1: Recognizing the hand pose

- Part 2: Draw with a hand

- Part 3: Recognize symbols

- Part 4: Send player commands

- Conclusion

- References

Introduction

During a quarterly hackathon (2022 Q3) at Bitmovin, my team and I experimented with controlling the Bitmovin Player via video camera. We came up with the following ideas:

- issue commands to the player when detecting a certain object. For example, hold a cat in front of the camera to start/stop playback.

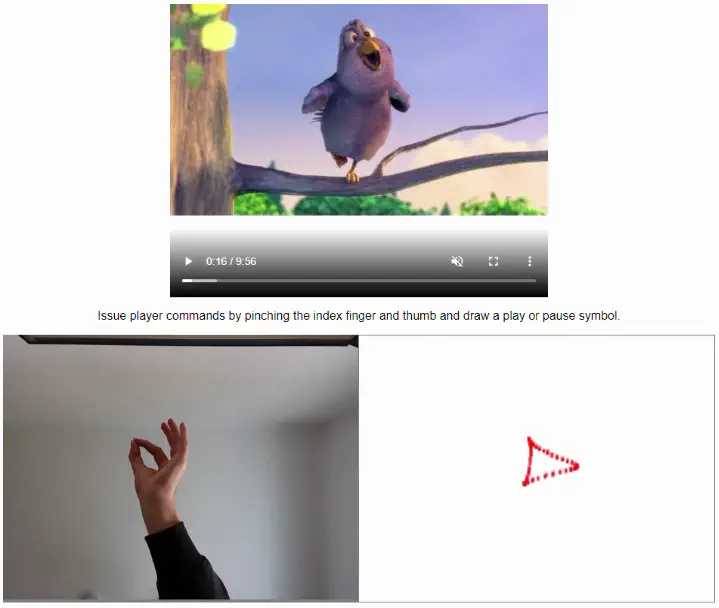

- use hand gestures such as swiping or drawing to control the player. For example, start video playback by pinching the index finger and thumb together and drawing a play symbol.

During the hackathon we only achieved the first point:

We split the webcam video stream in half: when a cat is in the left half, the program sends a play command, and when the cat is in the other half, it sends a pause command.

This worked fairly well and was quite amusing:

... but the second part was still open. Several after-work hours later, I finally managed to produce something worth presenting:

How to control a web player with hand gestures

The GitHub project resides at github.com/peterpf/handgesture-input and relies on the following frameworks and libraries:

- Vite | Build system.

- React.js | JavaScript framework for developing web applications.

- TensorFlow.js | Machine learning library.

- mediapipe/hands | Offers cross-platform ML solutions, including a model for hand pose detection.

The application is implemented with TypeScript + React as it allows for quick prototyping and the web platform offers everything we need:

- access to the host’s camera,

- a simple video player integration,

- and it supports major machine learning libraries (e.g. TensorFlow).

Problem Breakdown

In order to find a solution to the problem, we can break it down into smaller sub-tasks:

- Run a hand posture detection algorithm to get granular information about finger positions.

- Define a hand pose for drawing (capture position) and store the positions in an array.

- Run a shape recognition algorithm on the input drawing.

- Map the recognized shapes to player commands.

Part 1: Recognizing the hand pose

The mediapipe/hands library is exactly what we need.

The implementation requires a base class which manages the video stream and the hand-pose detection:

class GestureInputService {

/**

* The video stream source.

*/

private videoStream: HTMLVideoElement;

/**

* Hand detection algorithm which extracts hand poses from the webcam feed.

*/

private detector: handPoseDetection.HandDetector | undefined;

public constructor(videoStream: HTMLVideoElement) {

this.videoStream = videoStream;

this.initHandDetection();

}

}

From the constructor we set up the hand-pose detection model with the initHandDetection method.

private async initHandDetection() {

const detectorConfig: handPoseDetection.MediaPipeHandsMediaPipeModelConfig = {

runtime: 'mediapipe',

solutionPath: '/',

maxHands: 1

};

const model = handPoseDetection.SupportedModels.MediaPipeHands;

this.detector = await handPoseDetection.createDetector(model, detectorConfig);

// Start listening to the webcam feed

const stream = await navigator.mediaDevices.getUserMedia({ video: true })

this.videoStream.srcObject = stream;

this.videoStream.addEventListener('loadeddata', () => {

this.listenToWebcamFeed();

});

}

This method is asynchronous because loading the pre-trained model, a file of several megabytes, takes some time.

Because we don’t want to load this over the internet, we can define the solutionPath to point to a local folder (/).

In order to make the file accessible over the web browser, we need to set the base/node_modules/@mediapipe/hands as Vite’s public folder.
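The public-folder setup described above could look roughly like the following config fragment. It is only a sketch: it uses Vite's `publicDir` option and assumes the path is given relative to the project root, which may differ from the actual project's configuration.

```typescript
// vite.config.ts (sketch, not copied from the project)
import { defineConfig } from 'vite';

export default defineConfig({
  // Assumption: serve the mediapipe/hands package files from the web root,
  // so that `solutionPath: '/'` resolves to this folder.
  publicDir: 'node_modules/@mediapipe/hands',
});
```

Note that this replaces Vite's default `public` folder, so other static assets would have to be served differently.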

Listen to the loadeddata event to get notified once the video stream has some data, and call the listenToWebcamFeed method.

Listing 1: Callback for processing webcam images.

private async listenToWebcamFeed() {

const hands = await this.detector!.estimateHands(this.videoStream, { flipHorizontal: true });

// Code for processing `hands` ...

// Recursively call listenToWebcamFeed to create a loop.

window.requestAnimationFrame(() => { this.listenToWebcamFeed() });

}

Invoking listenToWebcamFeed runs the hand-pose detection algorithm on the current video frame.

Use the window.requestAnimationFrame method to schedule the next call, so that the detection algorithm runs before every repaint of the browser.

According to developer.mozilla.org (last accessed 2022.09.25), the requestAnimationFrame callback is called several times a second:

The number of callbacks is usually 60 times per second, but will generally match the display refresh rate in most web browsers as per W3C recommendation.

With this we get regular updates on the hand-pose and conclude the first step.

Part 2: Draw with a hand

Now that we track the hand's location in the webcam feed, we can use it to draw on a canvas. But tracking the whole hand all the time would result in never-ending drawing. Therefore, we define a specific hand gesture as the drawing pose for our algorithm to pick up. A very basic one, also found in other software, is the pinching gesture:

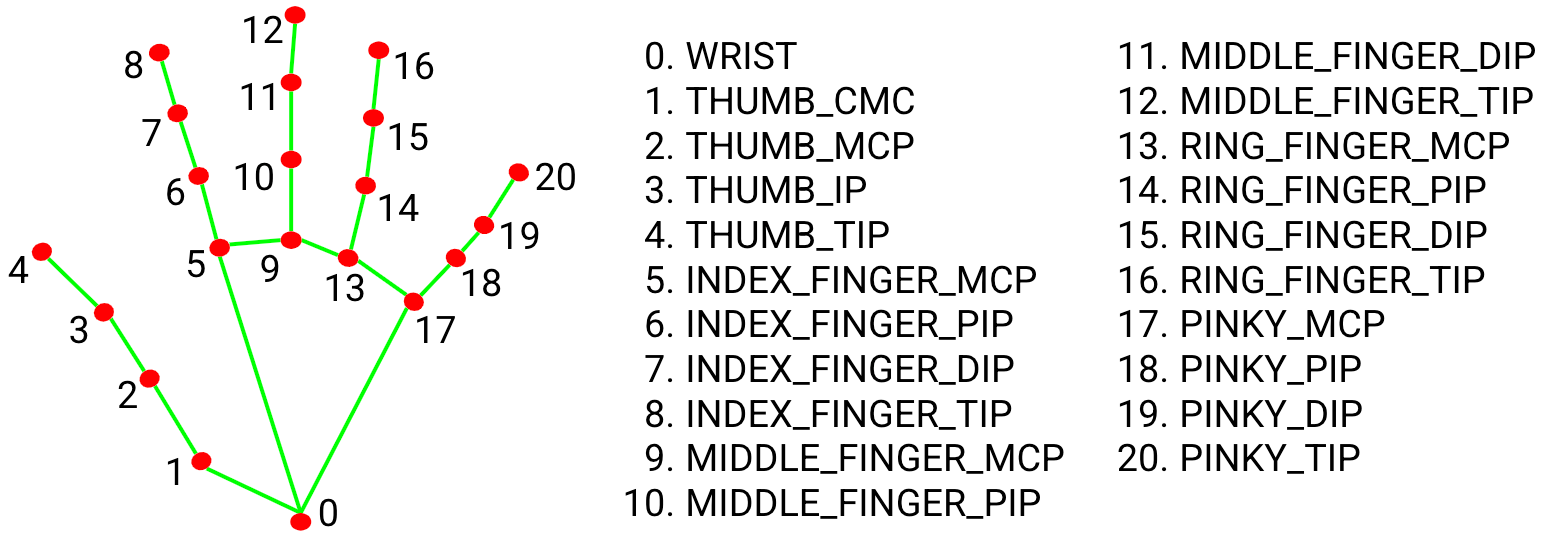

Its simplicity stems from only tracking the distance between the index finger and thumb, and because humans can do it without much effort. The mediapipe hand-pose detection algorithm provides 3D coordinates for the following hand landmarks:

The implementation below uses the keypoints 4 and 8 illustrated in Figure 1, which correspond to the thumb tip and the index finger tip, respectively.

When the Euclidean distance between the two points is at or below a certain threshold (thresholdForPinchingGesture), the method returns the midpoint of the two points; otherwise it returns undefined.

private checkPinchingPoint(hand?: Hand): Point2D | undefined {

if (hand == null) {

return undefined;

}

const thumbKeypoint = hand.keypoints[4];

const indexFingerKeypoint = hand.keypoints[8];

const distance = euclideanDistance(indexFingerKeypoint, thumbKeypoint);

if (distance <= thresholdForPinchingGesture) {

const x = (indexFingerKeypoint.x + thumbKeypoint.x) / 2;

const y = (indexFingerKeypoint.y + thumbKeypoint.y) / 2;

return { x, y };

}

return undefined;

}
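The euclideanDistance helper and the threshold used by checkPinchingPoint are not shown in this post. A minimal sketch, assuming 2D pixel coordinates and a made-up threshold value, could look like this:

```typescript
// Hypothetical helper: the post does not show the project's own implementation.
interface Point2D {
  x: number;
  y: number;
}

/** Straight-line distance between two points in the image plane (z is ignored). */
function euclideanDistance(a: Point2D, b: Point2D): number {
  return Math.hypot(a.x - b.x, a.y - b.y);
}

// Assumed value in pixels; tune it for your camera resolution and hand distance.
const thresholdForPinchingGesture = 30;

const thumbTip: Point2D = { x: 100, y: 120 };
const indexTip: Point2D = { x: 112, y: 136 };
const isPinching = euclideanDistance(thumbTip, indexTip) <= thresholdForPinchingGesture;
console.log(isPinching); // true: the tips are 20 px apart
```

A pixel-based threshold depends on how far the hand is from the camera, so normalizing by the hand size may be worth considering.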

With the code we have so far, we are able to record drawings made with a pinching gesture by storing the return values of checkPinchingPoint in an array.

But the array would easily fill up with gibberish over time, leaving no room to estimate a gesture from the points.

For example, you might draw a ✔️ symbol and then want to draw another one, or you might have drawn something random.

Therefore, we implement a mechanism with the following responsibilities:

- Reset the point array after a certain amount of time of no input, e.g. 5 seconds after the last hand input.

- Keep track of individual strokes, e.g. differentiate between the strokes of the ⏸️ symbol.

- Interpolate individual strokes to get a clean line.

- Notify observers when the timeout is over and send the interpolated points.

Everything mentioned above is packed into the MultiStrokeGestureTracker.ts file, which I'm not explaining further in this post.

Linear interpolation of the strokes improves the gesture recognition and makes the system more stable against outliers, e.g. inaccurate strokes/drawings or framerate drops.
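The interpolation code itself is not part of this post. One common way to do it is to resample each stroke to a fixed number of points that are equally spaced along the drawn path. The sketch below illustrates that idea; `resampleStroke` is a hypothetical helper and not taken from MultiStrokeGestureTracker.ts.

```typescript
interface Point2D {
  x: number;
  y: number;
}

const distance = (a: Point2D, b: Point2D): number => Math.hypot(a.x - b.x, a.y - b.y);

/**
 * Resamples one stroke to `n` points spaced equally along its path,
 * using linear interpolation between the recorded points.
 */
function resampleStroke(points: Point2D[], n: number): Point2D[] {
  if (points.length === 0 || n < 1) return [];
  let total = 0;
  for (let i = 1; i < points.length; i++) total += distance(points[i - 1], points[i]);
  // A single point (or a stroke without movement) cannot be interpolated.
  if (total === 0 || n === 1) return Array.from({ length: n }, () => ({ ...points[0] }));

  const spacing = total / (n - 1);
  const result: Point2D[] = [{ ...points[0] }];
  let segment = 1; // index of the end point of the current segment
  let walked = 0; // path length up to the start of the current segment
  for (let k = 1; k < n - 1; k++) {
    const target = k * spacing;
    // Advance to the segment that contains the target path length.
    while (segment < points.length - 1) {
      const length = distance(points[segment - 1], points[segment]);
      if (walked + length >= target) break;
      walked += length;
      segment++;
    }
    const a = points[segment - 1];
    const b = points[segment];
    const length = distance(a, b);
    const t = length === 0 ? 0 : Math.min(1, (target - walked) / length);
    result.push({ x: a.x + t * (b.x - a.x), y: a.y + t * (b.y - a.y) });
  }
  result.push({ ...points[points.length - 1] });
  return result;
}

// Two recorded points become five evenly spaced ones.
console.log(resampleStroke([{ x: 0, y: 0 }, { x: 10, y: 0 }], 5).map(p => p.x));
// [0, 2.5, 5, 7.5, 10]
```

Because the output spacing depends only on the path and not on when the points were captured, a dropped frame changes the result very little.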

To summarize the MultiStrokeGestureTracker, it takes in points of the pinching gesture and spits out an array with interpolated points with an index which corresponds to the stroke.

Once the timeout of 1.5 seconds runs out (and no point was added), it clears the point array and starts collecting points from scratch.
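To make the behaviour described above more concrete, here is a heavily simplified sketch of such a tracker. It is not the code from MultiStrokeGestureTracker.ts: the class name, the `poll` method, and the explicit timestamps are assumptions made to keep the example deterministic, and interpolation is left out.

```typescript
interface Point2D {
  x: number;
  y: number;
}

interface StrokePoint extends Point2D {
  strokeId: number; // index of the stroke this point belongs to
}

class SimpleStrokeTracker {
  private points: StrokePoint[] = [];
  private currentStroke = 0;
  private lastInputAt: number | undefined;
  private readonly timeoutMs: number;
  private readonly onData: (points: StrokePoint[]) => void;

  constructor(timeoutMs: number, onData: (points: StrokePoint[]) => void) {
    this.timeoutMs = timeoutMs;
    this.onData = onData;
  }

  /** Adds a pinching point to the current stroke. */
  addPoint(point: Point2D, nowMs: number): void {
    this.points.push({ ...point, strokeId: this.currentStroke });
    this.lastInputAt = nowMs;
  }

  /** Called while no pinch is detected; only advances once per released pinch. */
  startNewStroke(): void {
    const last = this.points[this.points.length - 1];
    if (last !== undefined && last.strokeId === this.currentStroke) {
      this.currentStroke++;
    }
  }

  /** Emits the collected points and resets once the timeout has elapsed. */
  poll(nowMs: number): void {
    if (this.lastInputAt === undefined) return;
    if (nowMs - this.lastInputAt < this.timeoutMs) return;
    const finished = this.points;
    this.points = [];
    this.currentStroke = 0;
    this.lastInputAt = undefined;
    this.onData(finished);
  }
}

const tracker = new SimpleStrokeTracker(1500, points => {
  console.log(points.map(p => p.strokeId)); // [0, 0, 1]
});
tracker.addPoint({ x: 0, y: 0 }, 0);
tracker.addPoint({ x: 1, y: 1 }, 16);
tracker.startNewStroke(); // pinch released
tracker.addPoint({ x: 5, y: 5 }, 100);
tracker.poll(1000); // 900 ms without input: nothing happens
tracker.poll(1600); // 1500 ms without input: onData fires
```

A real implementation would more likely use a timer instead of `poll`; passing timestamps in just makes the timeout logic easy to follow and to test.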

Now we put together the things implemented above by creating a new method which

- runs the pinching gesture detection on every hand update,

- and adds the pinching point to the multi-stroke gesture tracker.

private onHandGesture(hands: HandInput): void {

const primaryHand = hands.find(h => h.handedness === 'Right');

if (primaryHand == null) {

return;

}

const pinchingPoint = this.checkPinchingPoint(primaryHand);

if (pinchingPoint == null) {

this.multiStrokeGestureTracker.startNewStroke();

} else {

this.multiStrokeGestureTracker.addPoint(pinchingPoint);

this.notifyPinchingPointObservers(pinchingPoint);

}

}

By modifying the callback code in Listing 1 we put everything together:

private async listenToWebcamFeed() {

const hands = await this.detector!.estimateHands(this.videoStream, { flipHorizontal: true });

// Check for pinching gesture + multi-stroke tracker.

this.onHandGesture(hands);

// Recursively call listenToWebcamFeed to create a loop.

window.requestAnimationFrame(() => { this.listenToWebcamFeed() });

}

This concludes the second step. Next up:

Part 3: Recognize symbols

Once we have the drawing represented by a point cloud stored in an array, it is time to run an algorithm to detect predefined symbols.

The $Q Super-Quick Recognizer from depts.washington.edu (last accessed 2022.09.02) is exactly what we need.

Citing from its website:

The $Q Super-Quick Recognizer is a 2-D gesture recognizer designed for rapid prototyping of gesture-based user interfaces, especially on low-power mobiles and wearables. It builds upon the $P Point-Cloud Recognizer but optimizes it to achieve a whopping 142x speedup, even while improving its accuracy slightly. $Q is currently the most performant recognizer in the $-family. Despite being incredibly fast, it is still fundamentally simple, easy to implement, and requires minimal lines of code. Like all members of the $-family, $Q is ideal for people wishing to add stroke-gesture recognition to their projects, now blazing fast even on low-capability devices.

A demo application on the website showcases the algorithm’s capabilities:

The $Q Recognizer works by measuring the similarity of new observations (such as the drawing in Figure 2) to already known point clouds and returns the closest matching gesture.

As it is based on the $P algorithm, the following listing formalizes the coarse workings of the algorithm:

Listing 2: Pseudocode of $P's matching strategy from the paper (last accessed 2022.09.02).

n <- 32

score <- infinity

for each template in templates do

NORMALIZE(template, n) // should be pre-processed

d <- GREEDY-CLOUD-MATCH(points, template, n)

if score > d then

score <- d

result <- template

return (result, score)
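Listing 2 leaves GREEDY-CLOUD-MATCH abstract. The sketch below shows the matching idea in TypeScript, loosely following the $P paper's pseudocode. It assumes that the input and all templates are already normalized and resampled to the same number of points. The function names are illustrative and are not the ones used in the project's port, and none of $Q's speed optimizations are included.

```typescript
interface Point2D {
  x: number;
  y: number;
}

interface Template {
  name: string;
  points: Point2D[];
}

/** Greedily pairs each point with its nearest unmatched template point. */
function cloudDistance(points: Point2D[], template: Point2D[], start: number): number {
  const n = points.length;
  const matched = new Array<boolean>(n).fill(false);
  let sum = 0;
  let i = start;
  do {
    let min = Infinity;
    let index = -1;
    for (let j = 0; j < n; j++) {
      if (matched[j]) continue;
      const d = Math.hypot(points[i].x - template[j].x, points[i].y - template[j].y);
      if (d < min) {
        min = d;
        index = j;
      }
    }
    matched[index] = true;
    // Earlier matches are more reliable, so they get a higher weight.
    const weight = 1 - ((i - start + n) % n) / n;
    sum += weight * min;
    i = (i + 1) % n;
  } while (i !== start);
  return sum;
}

/** Tries several starting points in both directions and keeps the best distance. */
function greedyCloudMatch(points: Point2D[], template: Point2D[]): number {
  const n = points.length;
  const step = Math.max(1, Math.floor(Math.sqrt(n))); // n^(1 - 0.5)
  let min = Infinity;
  for (let i = 0; i < n; i += step) {
    min = Math.min(min, cloudDistance(points, template, i), cloudDistance(template, points, i));
  }
  return min;
}

/** Returns the template with the smallest distance to the input cloud. */
function recognize(points: Point2D[], templates: Template[]) {
  let score = Infinity;
  let result: Template | undefined;
  for (const template of templates) {
    if (template.points.length !== points.length) continue; // sizes must match
    const d = greedyCloudMatch(points, template.points);
    if (d < score) {
      score = d;
      result = template;
    }
  }
  return { result, score };
}

const templates: Template[] = [
  { name: 'line-h', points: [0, 1, 2, 3].map(x => ({ x, y: 0 })) },
  { name: 'line-v', points: [0, 1, 2, 3].map(y => ({ x: 0, y })) },
];
const drawn = [0, 1, 2, 3].map(x => ({ x, y: 0.1 }));
console.log(recognize(drawn, templates).result?.name); // line-h
```

The score is a distance, so lower values mean a better match.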

The authors of the paper provide a JavaScript (last accessed 2022.09.02) implementation of the $Q Recognizer.

As the project is written in TypeScript, I ported the code with slight adaptations, consulting the implementation at github.com/wcchoi/dollar-q.

The implementation provides the following interface:

- The constructor, which takes the predefined gestures (those that should be recognized) as arguments and preprocesses them:

constructor(predefinedGestures: PointCloud[]) {

for (let i = 0; i < predefinedGestures.length; i++) {

const gesture = predefinedGestures[i];

const normalizedPoints = RecognizerUtils.normalize(gesture.points, this.origin, false);

this.pointClouds.push({ ...gesture, points: normalizedPoints });

}

}

- A recognize method to match a point cloud to one of the defined gestures, returning the result in the form of:

{

"name": string, // Name of the matching gesture.

"score": number // The score (or distance) to the gesture.

}

Integrate the $Q Recognizer into the project by creating a new instance in the GestureInputService's constructor.

Then implement the MultiStrokeGestureTracker’s onData callback method and apply the recognizer on the point cloud:

/**

* Callback function of the MultiStrokeGestureTracker when a gesture is seen as "completed".

*/

public onData(points: Point[]): void {

const result = this.recognizer.recognize(points);

const recognizedGesture: RecognizedGesture = {

confidenceScore: result.score,

gesture: mapPredefinedGestureToGesture(result.name)

};

// TODO: Send player commands based on the result.

}

The only step left is to act on recognized gestures and send appropriate player commands.

Part 4: Send player commands

The VideoPlayer.tsx is a wrapper class around the HTML video player and provides the following interface:

- play: continue playback.

- pause: pause playback.

In the background, the GestureToPlayerMapperService.ts file manages the web player’s state with a state machine:

The GestureToPlayerMapperService listens to data from the GestureInputService.ts class and sends the appropriate player command.
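The state machine itself is not listed in this post. A minimal sketch of the idea, assuming only the two states and commands mentioned above, might look as follows. The class, the `PlayerLike` interface, and the `maxScore` cut-off are hypothetical; the sketch also assumes that the recognizer's score is a distance, so that lower values are better.

```typescript
type PlayerState = 'playing' | 'paused';
type Gesture = 'play' | 'pause' | 'unknown';

/** Minimal player surface with the two commands described above. */
interface PlayerLike {
  play(): void;
  pause(): void;
}

class GestureToPlayerMapper {
  private state: PlayerState = 'paused';
  private readonly player: PlayerLike;
  private readonly maxScore: number;

  constructor(player: PlayerLike, maxScore: number) {
    this.player = player;
    this.maxScore = maxScore;
  }

  /** Applies a recognized gesture and returns the resulting player state. */
  onGesture(gesture: Gesture, score: number): PlayerState {
    // Ignore drawings that are too far away from every known gesture.
    if (score > this.maxScore) return this.state;
    if (this.state === 'paused' && gesture === 'play') {
      this.player.play();
      this.state = 'playing';
    } else if (this.state === 'playing' && gesture === 'pause') {
      this.player.pause();
      this.state = 'paused';
    }
    return this.state;
  }
}

// A fake player that records the commands it receives.
const commands: string[] = [];
const fakePlayer: PlayerLike = {
  play: () => { commands.push('play'); },
  pause: () => { commands.push('pause'); },
};

const mapper = new GestureToPlayerMapper(fakePlayer, 10); // 10 is an arbitrary cut-off
mapper.onGesture('play', 2); // paused -> playing
mapper.onGesture('pause', 50); // ignored, score too high
mapper.onGesture('pause', 3); // playing -> paused
console.log(commands); // ['play', 'pause']
```

Keeping the state in one place means that a repeated play gesture during playback does nothing instead of sending a redundant command.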

Conclusion

By combining a TensorFlow model for hand posture estimation (mediapipe/hands) with the $Q Super-Quick Recognizer algorithm for symbol recognition, we are able to create a simple hand-gesture interface for a web player in a matter of hours.

The approach does not require model training and provides a basic example of how someone can control a web player.

The $Q algorithm is easily extensible with additional gestures and therefore allows adapting the demo application to a variety of problems.